UK police working with controversial tech giant Palantir on real-time surveillance network

Published on 16 June 2025

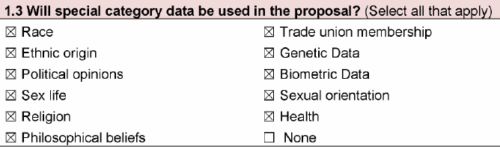

The Nectar project offers 'advanced data analysis' using a wide range of sensitive personal information

Reporting by Mark Wilding for Liberty Investigates and Cahal Milmo for the i.